Transactional Outbox: A Place Where Microservices Architecture And Post-Office Meets

The analogy might be far-fetched, but I’ll run with it.

Everyone past twenty years old today probably sent a paper letter once in his life.

Ah, the nostalgic days of carefully crafting messages, sealing them in envelopes, and trusting the postal system to deliver our thoughts and emotions.

Without emails, phone, or TikTok, you had to wait days for an answer.

Don’t worry. You haven't misclicked. This article is about microservice architecture. Can’t you already see the connection between event queues and mailboxes? No?

In a world where microservices are one of the most written subjects in software architecture, some communication patterns are lesser known than their successful brothers 2PC and SAGA.

In today’s article, I want to discuss one of those patterns to ensure data consistency in a microservices architecture: Transactional Outbox.

I’ve been a tech lead working with microservices for a few years and only discovered this pattern recently.

This article is a dive deep into the subject; I’ve never actually implemented it, but when I learned about it, the first thing that came to mind was that post-office analogy I’m about to share — the fact that both my parents worked at the post office might have played a role in that.

Event-driven microservices introduction.

To properly understand the issues that the Outbox pattern fixes, you first need to have a basic understanding of what an event-driven microservice architecture is and the struggles that come with it.

In a microservices architecture, your services will need to share data. It can be through REST HTTP calls for synchronous communication, but to ensure resiliency, the preferred way is through events — if eventual consistency is correct in your use case.

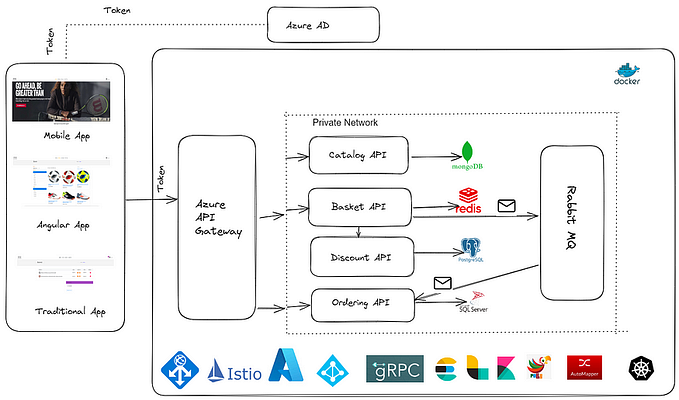

To do so, you would use a message broker like RabbitMQ or Kafka that your services listen to and react to based on the events.

Let’s take the online post office service as an example. It might sound like I have a weird attachment to the post office.

A revolution in the post-office world came a few years back (in France, at least); you can now send letters online. First, you select a PDF on your computer, then pay, and the post office will print it and send it to the given location.

The architecture would look something like that.

The process in the order service would be as follows:

- First, we must insert the new order in the database

- and then send the event to the broker to notify the other services.

That’s a dual write, where two writes are necessary to keep a consistent state.

A few things can go wrong in this process; the message broker can crash after the insert in the database is validated. Or there could be a network failure while sending the message. Both would leave the application in an inconsistent state.

How can we eliminate the dual write and run an atomic insert that handles both the order creation and the event creation simultaneously?

That’s where the Transactional Outbox Pattern comes in.

The post office analogy

What does the post office have to do with all of this…

Not so long ago, before smartphones dominated the world, humans communicated by sending each other letters — some still do.

However, it’s now more like a nice gesture during holidays than an efficacious communication method.

If you wanted to send a message to ask someone for a favor, for example, you wrote a letter and post-it using the post office. The post office took your letter and ensured it arrived at its destination.

The receiver just had to check his letterbox periodically to see if he had a new letter, and once he found your letter, he would decide if he liked you enough to respond or throw it in the trash and never think of you ever again!

Here you have it: the transactional outbox pattern in real life. I swear I’m not crazy, but I did warn you that it was far-fetched.

Transactional Outbox Pattern

Analogies are fun, but let’s jump into the real explanation.

The problem we are trying to fix is the atomicity between the insert in the order table and the sent event to the broker. We don’t want dual writes; those are bad.

To fix it, we will use the power of database transactions.

What’s a database transaction?

A transaction is a grouping of queries as a single unit. Either all of them succeed, or none of them are played.

You first begin the transaction, add all the queries you want to run inside and finish by committing. If any of the queries fails, a rollback is launched for the queries that ran before.

Here is a simple example.

-- Start the transaction

BEGIN TRANSACTION;

DECLARE @orderID INT = 101;

DECLARE @paymentAmount DECIMAL(10, 2) = 50;

-- Step 1: Create the order

INSERT INTO Orders (ID, Amount, Date)

VALUES (@orderID, @paymentAmount, GETDATE());

-- Step 2: Record the payment

INSERT INTO Payments (OrderID, PaymentAmount, PaymentDate)

VALUES (@orderID, @paymentAmount, GETDATE());

-- If everything is successful, commit the transaction

COMMIT;How can transactions be used to fix our issue?

In the Transactional Outbox Pattern, we have two new components:

- An intermediary table called “Outbox table”.

- A middleman service called the publisher or message relay.

The outbox table will store the events previously sent to the message broker directly.

When receiving the order, it will start a transaction to insert the order in the order table and the event into the outbox table simultaneously. This prevents data inconsistencies between orders and events in case of an outage (network failure, broker crashing, etc.)

In the post office analogy, that’s the sender! He writes the letter and delivers the message to the post office’s mailbox (outbox table). The message will stay in the mailbox until the mailman comes to pick it up.

The publisher, another service outside of the order service, will read the outbox table periodically and publish the event in a message broker.

The publisher will delete the event in the outbox table only when the message is successfully published in the message broker.

The publisher is the mailman, taking the message from the mailbox and putting it in your friend's mailbox.

Finally, the Shipping Service here is the recipient; he only gets your letter if he checks his mailbox and decides what he wants to do with it.

Advantages

- You are sure the message will reach the broker. The publisher can be down for hours; the message will remain when it returns online.

- You also benefit from an ACID transaction with clean rollbacks in case of an issue during the database insert.

Disadvantages

- There is a possibility the messages are sent multiple times. If the database deletes operation from the publisher service fails, the event from the outbox table may be read more than once. The receiving services (shipping, payment, etc.) must be idempotent to prevent this issue's impact.

Publisher implementation details

There are multiple ways of implementing the publisher service.

The first is to create a separate microservice to pull the outbox table periodically and send the event found in the table to the message broker.

This implementation is easy, but it comes with downsides.

Based on the load of your application, the service will have to scale horizontally. This implementation might bring duplicate event issues if two services query the table simultaneously. Making the required idempotency of receiving services, which we discussed earlier, even more important.

If you keep a single instance of the service, it will be a bottleneck

Another way of implementing it is through a log-based data change capture system. Databases provide logs for every event on the database, and it’s possible to implement reactive action on those logs.

For example, there are bin logs for MySQL, or in MongoDB, you have OpLogs.

You can implement a solution to listen to the bin logs on the outbox table and automatically send events to a message broker like Kafka or RabbitMQ. Services like Debezium already have connectors to make plugging your outbox table into the message broker easier.

You can read more about the Debezium implementation in this amazing post by Gunnar Morling.

The outbox pattern is a great way to make your architecture resilient, but it brings implementation overhead.

As with everything in IT, it comes with a cost. You have to decide if the data you’re dealing with is sensible enough that you can’t accept an event lost in the rare case of an outage.

It’s a tradeoff between development time and resiliency.

As for my analogy, I know it does not really work because you usually write the letter at your home and then go outside to post it.

It would work if the postman came inside your home to pick up the letter just as you finished writing it, but if you need to go outside to post it, it’s just like a dual write. A lot can go wrong in the outside world…

Thank you for reading until the end; here is a list of great content I read to prepare this article: